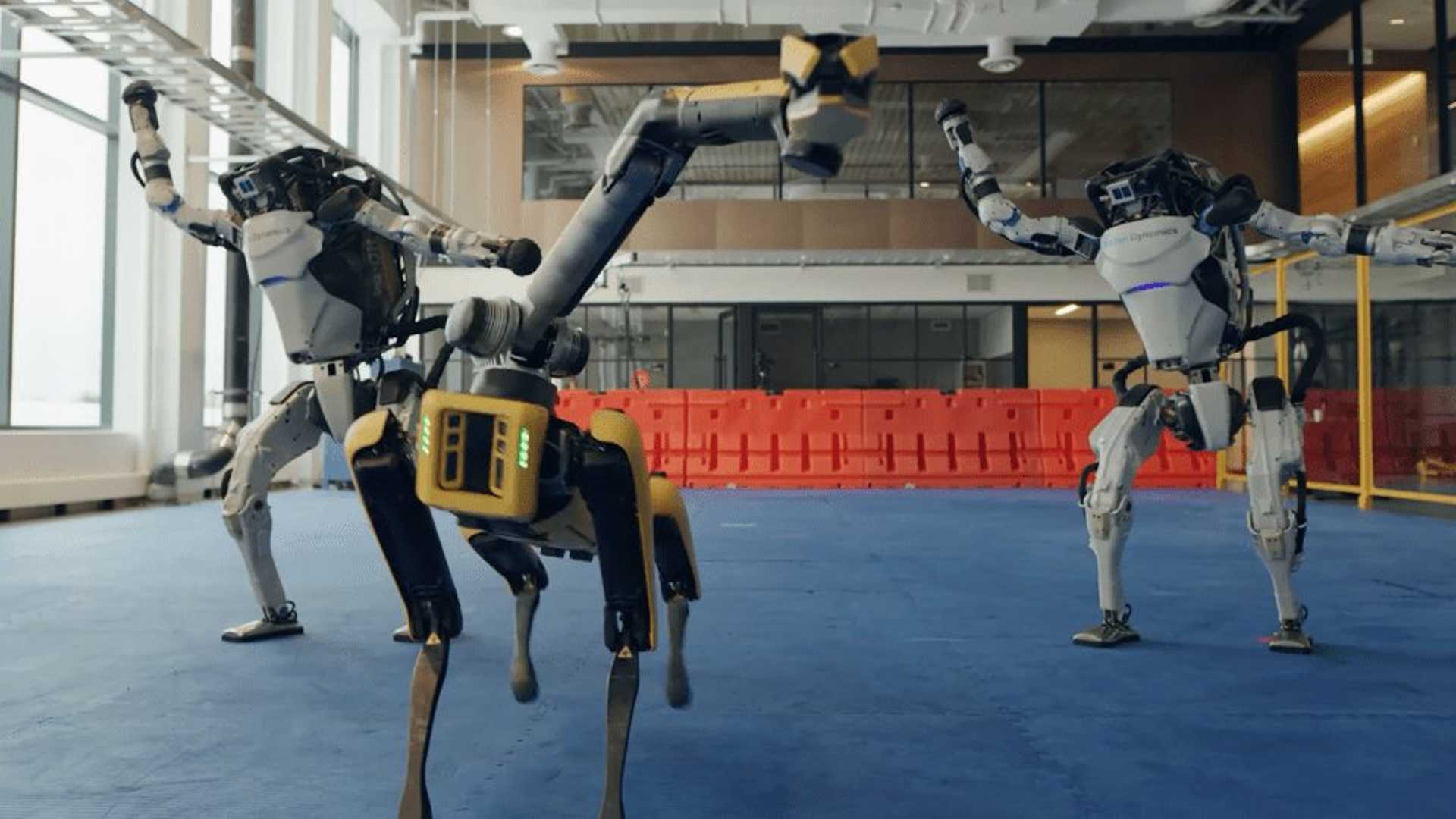

It gave a playful vision of robots and artificial intelligence, a sharp contrast to the bleak vision cultivated in so many works of fiction, such as Philip K. Dick's novel Do Androids Dream of Electric Sheep?

The video was well conceived, because dancing is precisely the kind of action that we do not associate with robots. In many ways, dancing is play. The word “robot” (invented by Czech artist Josef Čapek and introduced to the world by his brother, writer Karel Čapek) is derived from the Czech “robota”, meaning “forced labour.” As such, humans envision robots doing work, not the Mashed Potato or the Twist. But no matter how many jokes we crack about the Atlas robot dancing better than some of us ever will, the concept of non-biological entities expanding their capabilities is controversial. Further reactions to the dancing video ranged from disbelief to despondence (“We're doomed.”).

Are fears of robots and artificial intelligence just paranoia?

This question has been one of the primary focuses of Jaan Tallinn, co-founder of Skype, who has most recently split his time between philanthropy and investing. In a conversation with the Future of Life Institute, Tallinn said, “most of my support goes to organizations and people who are trying to think about ‘how can I do AI safely?’ In some ways [doing] the homework for people who are developing AI capabilities.” By investing in AI companies, he has endeavoured to inform them of the risks involved and to bring together people developing AI with those who are skeptical of it.

To be clear, a robot doesn't necessarily possess artificial intelligence; it can function on the direct commands of engineers alone. Likewise, artificial intelligence doesn't have to take a physical form. It can be contained within a computer, whether it's the “brain” of a self-driving car, Siri, or HAL 9000.

Jaan Tallinn lists artificial intelligence as one of his top three long-term existential fears for the world, along with synthetic biology and “unknown unknowns” (i.e. “things that we can’t perhaps think about right now”). So what are the real risks created by artificial intelligence, whether it has a physical form or not?

In 2012, Tallinn, along with Huw Price and Martin Rees, founded the Centre for the Study of Existential Risk (CSER) at the University of Cambridge. In its primary messaging, CSER describes how breakthroughs in image and speech recognition, autonomous robotics, language tasks, and game playing will bring about “new scientific discoveries, cheaper and better goods and services, [and] medical advances.” However, it also raises concerns around “privacy, bias, inequality, safety and security.” For instance, AI labour in industrial settings could fail to react to conditions that cause accidents. The centre also alludes to AI becoming a competitive weapon used by military powers. To address this risk, Tallinn has argued that humanity would benefit from delaying the military use of AI entirely.

Another common concern about the continued development of artificial intelligence is its ability to replace humans in an increasing number of jobs, just as factories with machines replaced cottage industries during the Industrial Revolution. Indeed, if artificial intelligence can exceed the output of human labourers in terms of quantity and quality, or machines are imparted with much greater strength and physical ability, what is the incentive to keep employing those humans?

Any of these risks becomes amplified when these entities can think and act on their own, without our species directly operating or programming them.

This kind of fear and concern is not always appreciated. As reported by CNBC in 2020, Tesla and SpaceX CEO (and also a CSER adviser) Elon Musk has irritated a number of researchers in the field by stating that AI is “potentially more dangerous than nukes.”

Pondering worst-case scenarios and risks may delay the engineers who advance AI and robotics, but it's the collision of differing viewpoints that will increase the likelihood of ethical, secure AI developments.

This is to say that caution and progress are not incompatible. Jaan Tallinn has said that as we introduce new non-human entities into society, “our job is to make them care about the rest of civilization…” If humanity can make artificial intelligence feel as well as it can program robots to dance, we'll gain much more than we would by solely enlisting AI to do work.